Running ollama in Google Colab

What is ollama?

Ollama is a tool that simplifies running LLMs locally. It sets up the dependencies of individual models, manages system requirements and optimises model configurations in the background.

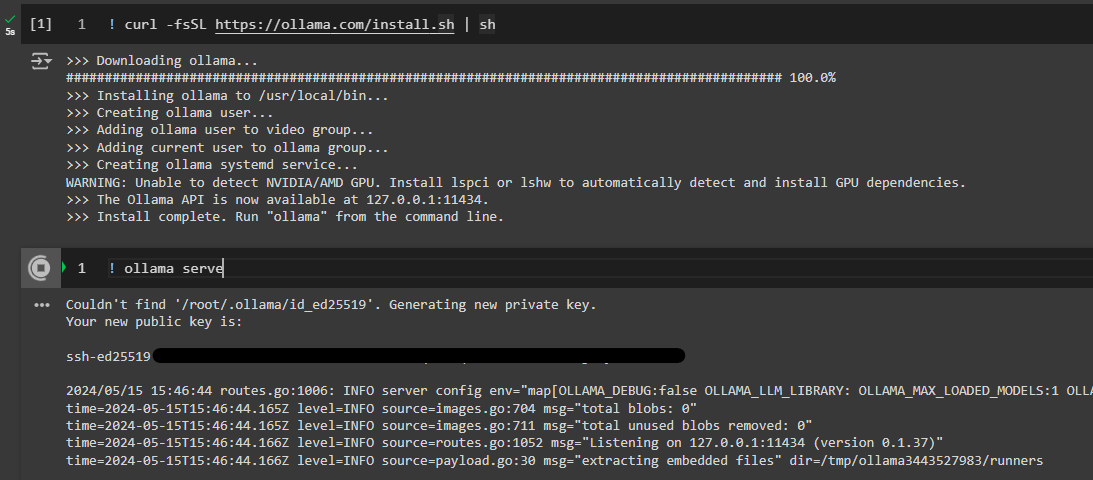

To apply these optimisations, ollama needs either its desktop app or ollama serve running in a separate terminal. Once the server is running, a model can be downloaded locally with ollama pull <model_name> and then used from Python.
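Once a model has been pulled, "interacting with it in Python" can be as simple as an HTTP request to the local server. The sketch below uses only the standard library and assumes the server is on ollama's default address, http://localhost:11434, and that you call its /api/generate endpoint with streaming turned off. The model name in the usage line (llama3) is just an example; use whatever you pulled.

```python
import json
import urllib.request

# Default address that `ollama serve` listens on (assumption: not overridden)
OLLAMA_URL = "http://localhost:11434"


def build_generate_payload(model: str, prompt: str) -> dict:
    """Request body for ollama's /api/generate endpoint.

    stream=False asks the server for one JSON object instead of
    a stream of partial chunks.
    """
    return {"model": model, "prompt": prompt, "stream": False}


def generate(model: str, prompt: str, base_url: str = OLLAMA_URL) -> str:
    """Send a single prompt to a running ollama server and return the reply text."""
    body = json.dumps(build_generate_payload(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        # A non-streaming reply carries the generated text in the "response" field
        return json.loads(response.read())["response"]
```

For example, after ollama pull llama3 has finished: `print(generate("llama3", "Why is the sky blue?"))`.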

What breaks down in Colab?

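Free Google Colab doesn't give you a separate terminal, so ollama serve has to run in a notebook cell. That cell blocks the notebook, and you can't access the models from other cells while it is running.

The ollama serve command starts but never completes, because it is a long-running server process that only stops when it is killed.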
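Whichever way you end up running the server, it helps to check from Python whether anything is actually answering before sending prompts. This is a minimal sketch assuming the default address; it relies on a running ollama server replying to a plain GET on its root URL with HTTP 200 (the body reads "Ollama is running").

```python
import urllib.request


def is_ollama_running(base_url: str = "http://localhost:11434", timeout: float = 2.0) -> bool:
    """Return True if an ollama server answers with HTTP 200 at base_url."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as response:
            return response.status == 200
    except (OSError, ValueError):
        # OSError covers URLError/HTTPError, refused connections and timeouts;
        # ValueError covers a malformed URL. Either way, nothing usable is serving.
        return False
```

In a free Colab notebook with no server started, `is_ollama_running()` should simply return False rather than hang.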

Solution 1: Deploying Locally or in a Separate VM

You can deploy ollama locally or in a separate VM and expose it to Colab through a tunnelling service such as ngrok. Here's a great thread on Stack Overflow that explains it. However, you'll need to set up an ngrok account and get your auth token to use it.
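On the Colab side, the only change is pointing your client at the tunnel instead of localhost. A minimal sketch, assuming you store the public URL in the OLLAMA_HOST environment variable (which ollama's own CLI and Python client also read) and that the remote server exposes the standard /api/tags endpoint for listing pulled models. The tunnel URL in the usage line is a placeholder, not a real address.

```python
import json
import os
import urllib.request


def resolve_host(default: str = "http://localhost:11434") -> str:
    """Pick the ollama base URL, preferring the OLLAMA_HOST environment variable."""
    host = os.environ.get("OLLAMA_HOST", default).strip()
    # Accept bare "host:port" values by assuming plain HTTP
    if not host.startswith(("http://", "https://")):
        host = "http://" + host
    return host.rstrip("/")


def list_models(base_url: str) -> list[str]:
    """Return the names of models already pulled on the (possibly remote) server."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as response:
        data = json.loads(response.read())
    return [model["name"] for model in data.get("models", [])]
```

For example: `os.environ["OLLAMA_HOST"] = "https://<your-tunnel-url>"`, then `list_models(resolve_host())`.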

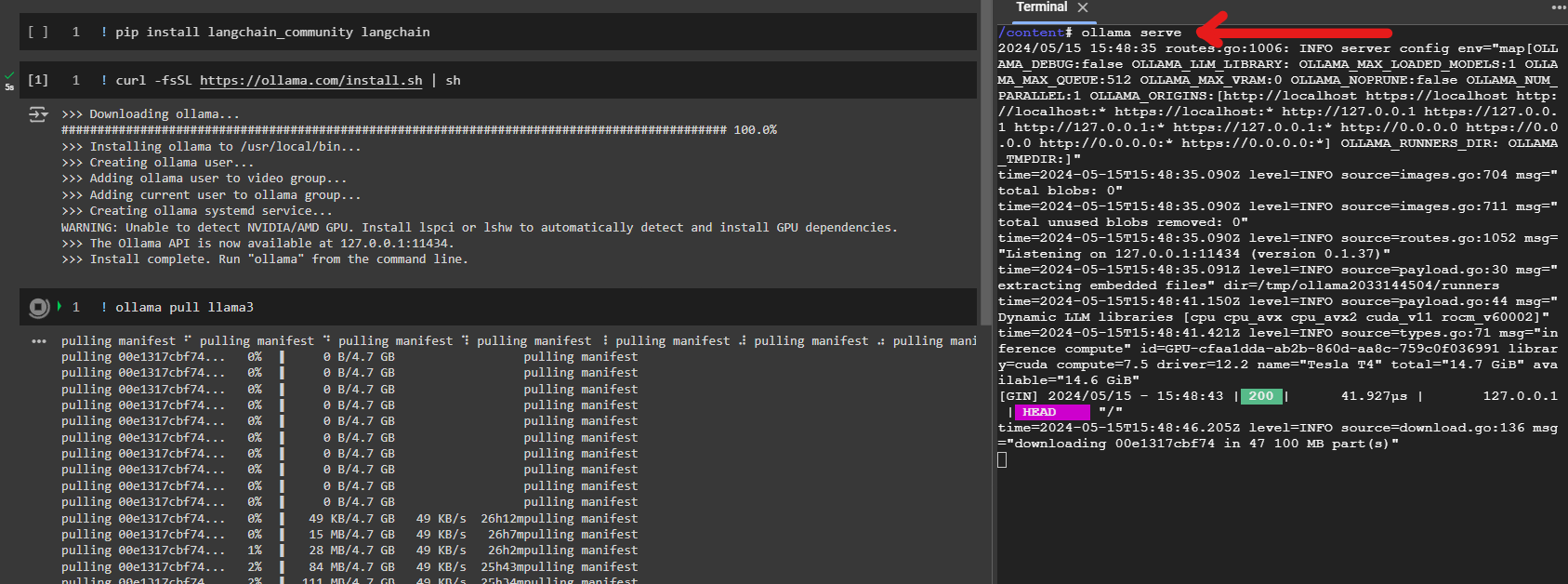

Solution 2: Using the Colab Terminal

The other option is to serve ollama from the Colab terminal and use the Python API from the notebook. The terminal is only available in Colab Pro, so this option requires a paid subscription.

Once ollama serve is running in the terminal, you can pull the models you want and run them from the notebook with any Python library that talks to the ollama server, such as langchain_community.
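If you'd rather not install a wrapper library, you can also talk to the server directly. This sketch assumes the server runs on the default address and uses ollama's /api/chat endpoint, which takes a list of role/content messages and, with streaming off, returns the assistant's reply in a "message" field. Keeping the conversation as a plain list of dicts is a design choice for the example, so each turn is just "old history plus two new messages".

```python
import json
import urllib.request


def build_chat_payload(model: str, history: list[dict], user_message: str) -> dict:
    """Body for /api/chat: earlier turns plus the new user message (history is not mutated)."""
    messages = history + [{"role": "user", "content": user_message}]
    return {"model": model, "messages": messages, "stream": False}


def chat(model: str, history: list[dict], user_message: str,
         base_url: str = "http://localhost:11434") -> list[dict]:
    """Send one chat turn and return the history extended with the user and assistant messages."""
    payload = build_chat_payload(model, history, user_message)
    request = urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        # Non-streaming reply: {"message": {"role": "assistant", "content": ...}, ...}
        reply = json.loads(response.read())["message"]
    return payload["messages"] + [reply]
```

For example: `history = chat("llama3", [], "Summarise what ollama does in one sentence.")`, then pass `history` back in for the next turn.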